This article is a continuation of the headless content delivery series.

I have read a few descriptions of headless CMS that mention content aggregation as a key benefit. I feel that this is a bit of a reach: content aggregation (sourcing content from several disparate sources, normalising it, and then making it available to consumers) is a pattern that decoupled and headless CMS can implement equally well. In fairness, content aggregation at the point of consumption, i.e. in the browser or app, from disparate sources would be headless by definition.

Having said that, the reason this does come up in the headless context is that a core principle of the headless content approach is a focus on structure and not on publishing artifacts such as pages. Good structures are a good starting point for content aggregation, as you're more likely to have, or need, content in a defined structure that you can map other source content to.

With that in mind, let's take a closer look at the two critical components: sourcing the data, and data transformation.

Sourcing Data

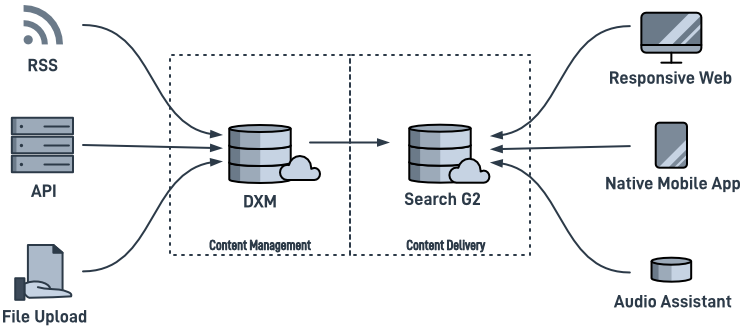

There are a couple of ways to import information into Crownpeak DXM. Both are pull approaches, meaning that DXM will retrieve information from a source rather than having the information pushed to it. These are:

- SFTP Imports; and

- Web Imports

SFTP Imports

The SFTP import mechanism works by having DXM connect to an SFTP server, perform a directory listing, match a token in the filename with an SFTP configuration, and trigger a template handler to do the import.
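To make the matching step concrete, here is a minimal sketch in Python, written outside DXM purely as an illustration. The configuration names, the tokens and the substring-matching rule are all assumptions of mine; DXM's actual matching logic is whatever your SFTP configuration defines.

```python
from typing import Optional

# Hypothetical import configurations, keyed by the filename token they respond to.
IMPORT_CONFIGS: dict[str, str] = {
    "products": "product-import",
    "stores": "store-import",
}


def match_config(filename: str, configs: dict[str, str]) -> Optional[str]:
    """Return the first configuration whose token appears in the filename."""
    lowered = filename.lower()
    for token, config_name in configs.items():
        if token in lowered:
            return config_name
    return None


def plan_imports(listing: list[str], configs: dict[str, str]) -> list[tuple[str, str]]:
    """Pair each file in a directory listing with the configuration that handles it."""
    planned = []
    for filename in listing:
        config_name = match_config(filename, configs)
        if config_name is not None:
            # Files with no matching token are simply skipped.
            planned.append((filename, config_name))
    return planned
```

Calling `plan_imports` with a directory listing returns only the files that have a matching configuration, which mirrors the "list, match, trigger" sequence described above.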

The import works best on text-based resources that have some kind of structure, although binary imports such as images can also be handled. Common text formats used for these integrations are delimited formats (comma-, pipe- or tab-separated values) and structured formats like JSON and XML.

The template handler that is invoked (ftp_import.aspx) is where we’re going to do our content transformation, mapping from the source format and fields to the desired target fields. The template code will pick up the source data from the FTPBody property on the FtpInputContext. This can be parsed manually or by using Template API utility methods like Util.CreateListFromCsv, Util.DeserializeDataContractJson and Util.DeserializeDataContractXml.
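As a language-neutral illustration of the parsing step, the sketch below turns a delimited or JSON body into a list of records using only the Python standard library. It is not the Template API: inside DXM you would use the utility methods named above, and the function names here are hypothetical.

```python
import csv
import io
import json


def parse_delimited(body: str, delimiter: str = ",") -> list[dict[str, str]]:
    """Parse a delimited body whose first row is a header into a list of records."""
    reader = csv.DictReader(io.StringIO(body), delimiter=delimiter)
    return [dict(row) for row in reader]


def parse_json_records(body: str) -> list[dict]:
    """Parse a JSON body, wrapping a single object so callers always get a list."""
    data = json.loads(body)
    return data if isinstance(data, list) else [data]
```

Either function gives the transformation step the same shape to work with: a list of dictionaries keyed by source field name.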

See Setting up SFTP Imports for a detailed step-by-step guide to getting this working.

Web Imports

Pulling information from an external system through a web API of some description is another common data import strategy.

This can be accomplished in a number of ways, but the approach I would recommend is a workflow that:

- has a timeout transition; and

- executes an import script on entry to a workflow state.

An alternative that is easier to get started with is to have an asset in a workflow with a schedule transition. The schedule can be set up with a “publish” frequency of hourly, daily, weekly or monthly. This is easier because there is only one template to write and, if you use the CS Developer template, the asset is the template!

Transforming Data

Data transformation can be done in many different ways but ultimately it comes down to code. The key here is to bring in the data from your external sources and map, generate or transform that into one or more data structures that your downstream consumers will be using. The simplest transformation would be the identity transform — mapping the source data one-to-one with fields for downstream use.
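A mapping like this can be expressed as a small table of target field, source field and conversion. The sketch below is in Python rather than DXM template code, and every field name in it (`headline`, `price_gbp` and so on) is made up for illustration.

```python
from typing import Any, Callable

# Hypothetical mapping: target field -> (source field, conversion function).
FIELD_MAP: dict[str, tuple[str, Callable[[Any], Any]]] = {
    "title": ("headline", str.strip),
    "price": ("price_gbp", float),
}


def identity(record: dict[str, Any]) -> dict[str, Any]:
    """The identity transform: every source field is carried over unchanged."""
    return dict(record)


def transform(
    record: dict[str, Any],
    field_map: dict[str, tuple[str, Callable[[Any], Any]]],
) -> dict[str, Any]:
    """Build a target record from a source record, dropping unmapped fields."""
    target = {}
    for target_field, (source_field, convert) in field_map.items():
        if source_field in record:
            target[target_field] = convert(record[source_field])
    return target
```

Keeping the mapping as data rather than as a chain of assignments makes it easier to see, per source, which fields are renamed, which are converted, and which are deliberately left behind.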

If you are planning to use Search G2 as the enabling service for headless content delivery, the minimum transformation required is to map your source data to search fields. This is particularly important when you take into account the fact that search documents are field-value pairs, so any nested or hierarchical structures will probably need to be flattened.
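Flattening is easiest to see with an example. The following Python sketch collapses a nested structure into field-value pairs by joining keys with an underscore and numbering list items. The naming scheme is an assumption of mine, not a Search G2 convention, so adapt it to whatever field names your search schema expects.

```python
from typing import Any


def flatten(value: Any, prefix: str = "", sep: str = "_") -> dict[str, Any]:
    """Collapse nested dicts and lists into a single level of field-value pairs."""
    flat: dict[str, Any] = {}
    if isinstance(value, dict):
        for key, child in value.items():
            name = f"{prefix}{sep}{key}" if prefix else str(key)
            flat.update(flatten(child, name, sep))
    elif isinstance(value, list):
        for index, child in enumerate(value):
            # List items are numbered so that each one gets its own field.
            name = f"{prefix}{sep}{index}" if prefix else str(index)
            flat.update(flatten(child, name, sep))
    else:
        # Scalars end the recursion and become the field's value.
        flat[prefix] = value
    return flat
```

For instance, `{"author": {"name": "Jo"}}` becomes `{"author_name": "Jo"}`. If your search fields support multiple values, you may prefer to keep lists of scalars intact rather than numbering them.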

Implementing on Crownpeak DXM

Once you have the content in your required structure, the content delivery approach is the same as we’ve talked about previously, namely publishing content into Search G2 and using the query API for content delivery.